ChatGPT Shopping Is Here, and Why It Matters

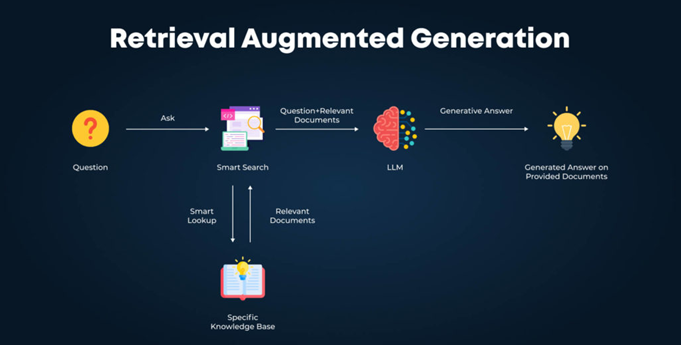

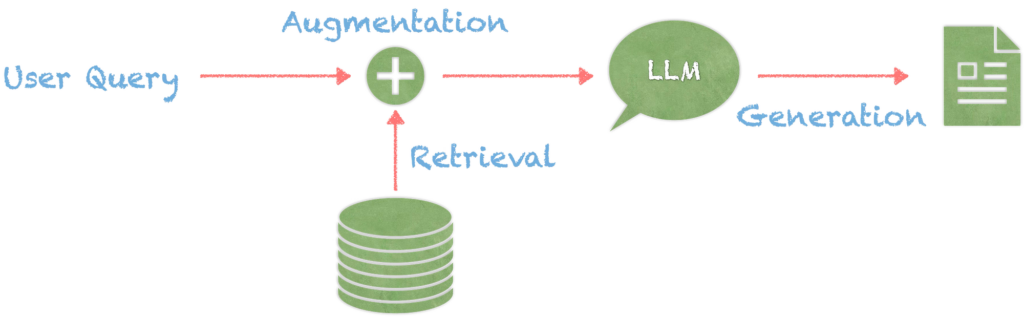

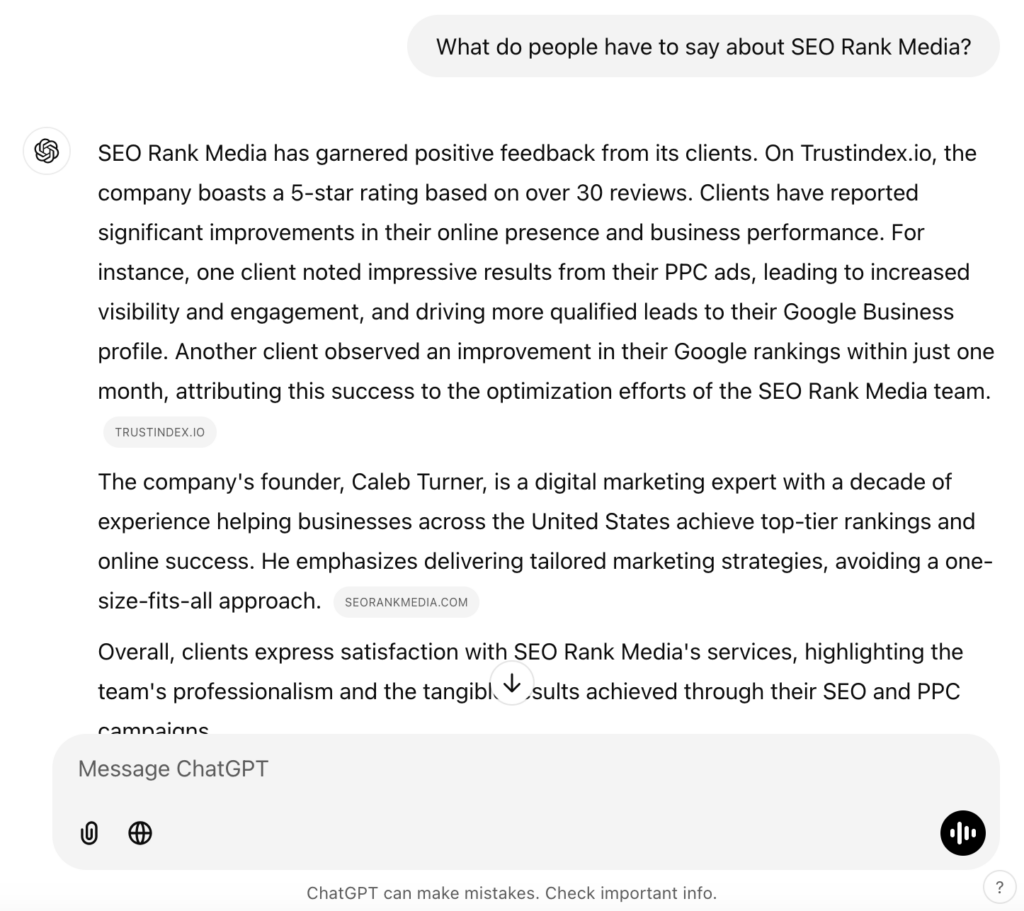

OpenAI’s Agentic Commerce approach lets ChatGPT understand your product catalog, present your items in shopping conversations, and, if you’re an approved merchant, complete purchases with Instant Checkout. You participate by providing a structured Product Feed and, if you opt in to purchases, lightweight checkout endpoints and a payment path. The feed is the assistant’s structured source of truth for your products; the spec accepts CSV, TSV, XML, or JSON and allows updates as often as every fifteen minutes so pricing and inventory stay fresh. For brands working with agencies like SEO Rank Media, this means keeping a clean, regularly refreshed catalog export and wiring up the minimal checkout endpoints when you’re ready.

Where this diverges from the traditional search mindset is intent and format. The goal isn’t just to match a query; it’s to help the assistant reason about fit, trade-offs, and next best products in a conversation. That’s why the feed includes fields for relationships (accessories, bundles), review content, and media like video and 3D models. It’s also why there are per-product control flags, so you can allow discovery without enabling checkout, or enable checkout only where you’re operationally confident.

Momentum matters here, too. OpenAI has publicly described merchant onboarding in the United States, Etsy availability inside ChatGPT, upcoming Shopify support, and a headline partnership with a major national retailer to enable shopping via ChatGPT. In other words, this is not a lab demo; it’s becoming a mainstream retail surface.

What’s actually different from a Google Shopping feed

Google Merchant Center’s base product feed is mature and widely adopted. It supports identifiers, variant grouping, sale windows, and even 3D model links for certain categories. It also enforces parity between the feed and your landing pages; Google crawls and compares price and availability, and it can disapprove products for mismatches.

OpenAI’s feed is built for a conversational assistant. It encourages you to author narrative descriptions, include related products and compatibility, and attach reviews and Q&A inline so the model can quote real customer language to address objections. Regional price and availability can live right in the same product record. And visibility and checkout are toggled per SKU via control flags, giving you more granular participation.
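To make “regional price and availability in the same record” concrete, here is a minimal Python sketch of how a system reading the feed might resolve the effective offer for one region. The `GeoOverride` and `ProductRecord` classes, region keys such as `"US-CA"`, and the `resolve_offer` helper are hypothetical illustrations; OpenAI’s spec defines its own encoding for `geo_price` and `geo_availability`, which this sketch does not reproduce.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class GeoOverride:
    # Hypothetical shape for one regional override; None means "use the base value".
    price: Optional[str] = None         # e.g. "31.99 USD"
    availability: Optional[str] = None  # e.g. "out_of_stock"


@dataclass
class ProductRecord:
    id: str
    price: str
    availability: str
    enable_search: bool = True
    enable_checkout: bool = False
    # Hypothetical: overrides keyed by a region code such as "US-CA".
    geo_overrides: dict[str, GeoOverride] = field(default_factory=dict)


def resolve_offer(record: ProductRecord, region: str) -> tuple[str, str]:
    """Return (price, availability) for a region, falling back to the base values."""
    override = record.geo_overrides.get(region)
    if override is None:
        return record.price, record.availability
    price = override.price if override.price is not None else record.price
    availability = (
        override.availability if override.availability is not None else record.availability
    )
    return price, availability


if __name__ == "__main__":
    lamp = ProductRecord(
        id="SKU-123",
        price="29.99 USD",
        availability="in_stock",
        geo_overrides={
            "US-CA": GeoOverride(price="31.99 USD"),
            "US-AK": GeoOverride(availability="out_of_stock"),
        },
    )
    print(resolve_offer(lamp, "US-CA"))  # ('31.99 USD', 'in_stock')
    print(resolve_offer(lamp, "US-AK"))  # ('29.99 USD', 'out_of_stock')
    print(resolve_offer(lamp, "US-NY"))  # ('29.99 USD', 'in_stock')
```

Regions without an override fall back to the base values, which is what lets one record serve every market without duplicating rows.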

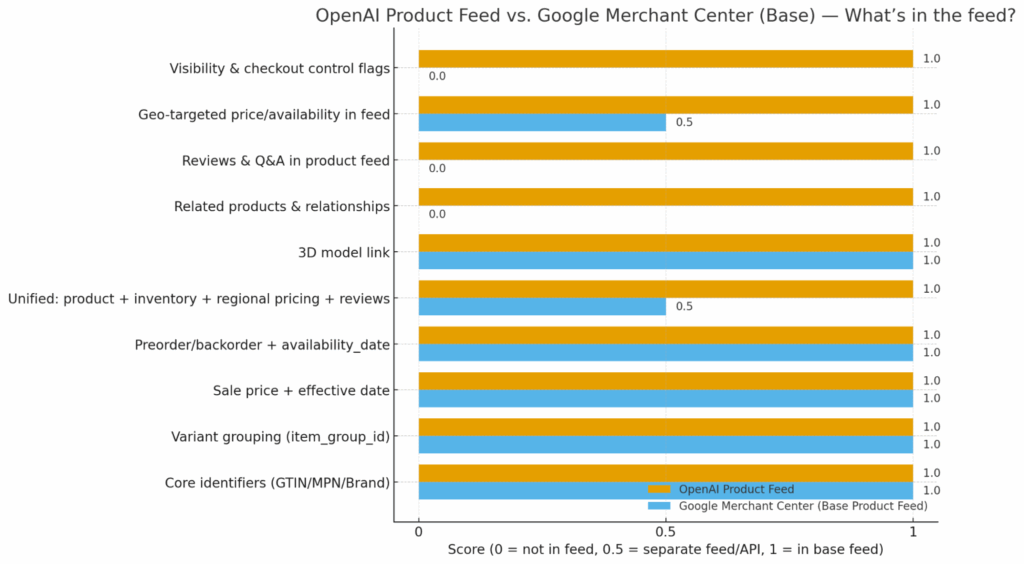

Comparison chart: what’s in the base feed vs. what requires extras

Legend: 1 = in the base product feed; 0.5 = supported via a separate feed or API; 0 = not supported

(The chart shows both feeds include core identifiers, variants, sales windows, and preorders. Google handles product ratings and regional inventory through separate mechanisms, so those are shown as partial support. OpenAI’s base feed includes reviews and Q&A inline, explicit related-product relationships, geo-overrides within the same record, and per-SKU participation flags for search and checkout.)

| Capability / Attribute | OpenAI Product Feed (base) | Google Merchant Center (base) | Google Separate Mechanism (if any) | Notes |
| --- | --- | --- | --- | --- |
| Core identifiers (GTIN, MPN, brand) | Yes | Yes | n/a | Both ecosystems rely on standard identifiers for matching and deduping. |
| Variant grouping (parent/child variants) | Yes | Yes | n/a | Use a stable parent grouping to present color/size options as one product. |
| Sale price + effective date window | Yes | Yes | n/a | Always pair sale price with its active start/end window. |
| Preorder / backorder + availability date | Yes (preorder date required) | Yes (preorder/backorder with date) | n/a | Both require a future availability_date when you mark preorder; GMC also supports backorder. |
| Inventory quantity | Yes (inline) | No | Yes (Content/Merchant API) | GMC uses inventory endpoints (incl. regionalinventory) rather than a quantity in the base feed. |
| Regional price and availability | Yes (inline geo overrides) | No (in base) | Yes (regionalinventory via Content/Merchant API) | Google manages regional overrides through its API and regions service. |
| Reviews & ratings in product feed | Yes (inline review stats / Q&A) | No (in base) | Yes (separate Product Ratings feed) | GMC ingests ratings via a distinct reviews feed; OpenAI accepts review data in the product feed. |
| Related products & relationships (accessories, substitutes, “often_bought_with”) | Yes (inline relationships) | No | n/a | OpenAI exposes explicit relationship fields to power conversational cross-sell. |
| 3D model link | Yes (model_3d_link) | Yes (virtual_model_link) | n/a | GMC’s 3D attribute is currently limited to certain categories; OpenAI lists 3D as a media field in the base feed. |
| Video link in base feed | Yes (video_link) | Limited (not a standard base attribute) | n/a | OpenAI’s base spec includes video URLs; Google handles rich media through other paths, not the simple base feed. |
| Per-SKU discovery & checkout flags | Yes (enable_search, enable_checkout) | No | n/a | OpenAI provides channel-specific participation controls per product. |
| Site-parity enforcement (price/availability) | Not described as a crawl-enforced requirement | Yes (site ↔ feed parity required) | n/a | GMC explicitly checks for price/availability mismatches and can disapprove items. |

Why SEOs and growth teams should treat this as a separate feed

First, narrative and semantics matter. The assistant isn’t just matching strings—it’s explaining. Well-written product descriptions, explicit benefits, trade-offs, and voice-of-customer snippets give it better material to work with. You can also declare relationships such as “required_part,” “often_bought_with,” or “compatible_with,” which helps the assistant build a smarter basket in one conversation.
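The relationship idea is easiest to see as a small graph query. This Python sketch groups the related SKUs for one product by relationship type so a suggested basket can lead with required parts. The tuple layout, the `suggest_basket` name, and the priority order are assumptions for illustration, not the feed’s actual schema.

```python
from collections import defaultdict

# Editorial assumption: the order in which relationship types are offered.
# The labels mirror the examples above; the ranking is not from any spec.
RELATIONSHIP_PRIORITY = ["required_part", "compatible_with", "often_bought_with"]


def suggest_basket(
    product_id: str, relationships: list[tuple[str, str, str]]
) -> list[tuple[str, list[str]]]:
    """Group related SKUs for one product by relationship type, in priority order.

    Each relationship is a (source_id, related_id, relationship_type) tuple.
    Types not listed in RELATIONSHIP_PRIORITY are ignored.
    """
    grouped: dict[str, list[str]] = defaultdict(list)
    for source_id, related_id, rel_type in relationships:
        if source_id == product_id:
            grouped[rel_type].append(related_id)
    return [(rel, grouped[rel]) for rel in RELATIONSHIP_PRIORITY if grouped.get(rel)]


if __name__ == "__main__":
    edges = [
        ("DRILL-1", "BIT-SET-9", "often_bought_with"),
        ("DRILL-1", "BATTERY-2", "required_part"),
        ("DRILL-1", "CASE-4", "compatible_with"),
        ("SAW-7", "BLADE-3", "required_part"),
    ]
    for rel, skus in suggest_basket("DRILL-1", edges):
        print(rel, skus)
    # required_part ['BATTERY-2']
    # compatible_with ['CASE-4']
    # often_bought_with ['BIT-SET-9']
```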

Second, unification reduces friction. Instead of juggling separate files for product, reviews, and regional overrides, you can put complete context in one feed. That simplifies your pipeline and gives the assistant the full picture in one place.

Third, freshness is a visibility lever. When price or stock changes, you can push the update quickly so the assistant doesn’t promote items that are out of stock or mispriced. Frequent updates are supported and expected.
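A simple way to act on this is to diff two catalog snapshots and push what moved. The sketch below assumes snapshots keyed by `id` and treats price, sale price, availability, and inventory as the fields that matter; the function name and field choice are hypothetical, and whether you upload only changed rows or a full file depends on what your endpoint accepts.

```python
from typing import Iterable

# Fields whose changes should trigger a push; an editorial choice for this sketch.
TRACKED_FIELDS = ("price", "sale_price", "availability", "inventory_quantity")


def changed_skus(
    previous: dict[str, dict],
    current: dict[str, dict],
    fields: Iterable[str] = TRACKED_FIELDS,
) -> tuple[list[str], list[str]]:
    """Compare two catalog snapshots (id -> record) and report what to push.

    Returns (changed, removed): ids that are new or whose tracked fields differ,
    and ids that were present before but are missing now.
    """
    fields = tuple(fields)
    changed = [
        sku
        for sku, row in current.items()
        if sku not in previous or any(previous[sku].get(f) != row.get(f) for f in fields)
    ]
    removed = [sku for sku in previous if sku not in current]
    return sorted(changed), sorted(removed)
```

How a removal should be signaled (for example, flipping `enable_search` off versus dropping the row) is worth confirming against the spec before you automate it.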

Fourth, there are real channel differences. Google requires strict parity between feed and website. OpenAI’s guidance, by contrast, centers the feed as the structured source of truth for conversational use. Treat them as distinct channels with distinct goals and editorial constraints, not a single file you copy and paste.

What this looks like operationally (a practical loop)

A typical team sets up a fifteen-minute job that builds a single authoritative export. The job joins product data with inventory, pricing, regional overrides, media, relationships, and review snippets or aggregates. The exporter validates key rules: currency codes, sale windows, working image and video links, and preorder dates when applicable. It then serializes to CSV or JSON and posts the file over encrypted HTTPS to the allow-listed endpoint. On success, it archives the file and logs the record count and checksum; on failure, it retries with backoff and raises an alert. Many teams begin with full refreshes and later shift to delta exports once volume grows.
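The serialize, checksum, upload, and retry portion of that loop can be sketched in Python as follows; validation is left to your own rules. `send` is a hypothetical stand-in for whatever HTTPS client posts to your allow-listed endpoint (the endpoint and authentication come from OpenAI’s onboarding, not from this sketch), and JSON is used only because it is one of the accepted formats.

```python
import hashlib
import json
import logging
import time
from typing import Callable

log = logging.getLogger("feed_export")


def serialize_feed(records: list[dict]) -> bytes:
    """Serialize records as a UTF-8 JSON array with stable key order."""
    return json.dumps(records, sort_keys=True, ensure_ascii=False).encode("utf-8")


def push_feed(
    records: list[dict],
    send: Callable[[bytes], None],
    max_attempts: int = 5,
    base_delay: float = 2.0,
    sleep: Callable[[float], None] = time.sleep,
) -> dict:
    """Serialize, checksum, and upload one feed file, retrying with exponential backoff.

    `send` is a hypothetical uploader that raises an exception on failure.
    Returns a summary for logging and archiving; raises after the final failed
    attempt so a scheduler or alerting hook can pick it up.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    payload = serialize_feed(records)
    checksum = hashlib.sha256(payload).hexdigest()
    for attempt in range(1, max_attempts + 1):
        try:
            send(payload)
        except Exception as exc:
            if attempt == max_attempts:
                raise RuntimeError(f"feed upload failed after {attempt} attempts") from exc
            delay = base_delay * 2 ** (attempt - 1)  # 2s, 4s, 8s, ... with the defaults
            log.warning("upload attempt %d failed (%s); retrying in %.0fs", attempt, exc, delay)
            sleep(delay)
        else:
            summary = {"records": len(records), "sha256": checksum, "attempts": attempt}
            log.info("feed uploaded: %s", summary)
            return summary
    raise AssertionError("not reached: the loop either returns or raises")
```

Archiving is left to the caller: store the payload next to the returned checksum and record count, and let the final `RuntimeError` reach whatever alerting your scheduler already uses. Injecting `sleep` keeps the backoff testable without real waits.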

To phase in purchases, keep discovery enabled while checkout is off, then enable checkout per SKU once you’re confident in stock accuracy, shipping options, and tax logic. Payments are delegated to your processor; the assistant renders the UI, but you authorize, capture, settle, and refund on your rails. If this sounds complicated, reach out to us and we’ll help you.
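Staged participation can be expressed as a per-SKU rule. In this minimal sketch, discovery stays on everywhere and `enable_checkout` turns on only when every readiness check passes. The `CheckoutReadiness` signals are hypothetical inputs from your own systems, and how the flags are written in the file (booleans or strings such as `"true"`) is a spec detail to confirm.

```python
from dataclasses import dataclass


@dataclass
class CheckoutReadiness:
    # Hypothetical per-SKU signals supplied by your own inventory, shipping, and tax systems.
    stock_verified: bool
    shipping_configured: bool
    tax_configured: bool


def participation_flags(readiness: CheckoutReadiness) -> dict[str, bool]:
    """Keep discovery on for every SKU; enable checkout only when every check passes."""
    ready = (
        readiness.stock_verified
        and readiness.shipping_configured
        and readiness.tax_configured
    )
    return {"enable_search": True, "enable_checkout": ready}


if __name__ == "__main__":
    print(participation_flags(CheckoutReadiness(True, True, False)))
    # {'enable_search': True, 'enable_checkout': False}
```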

Field-by-field: what each attribute means (plain English)

- id: Your stable internal SKU or product identifier. Do not recycle it; this anchors updates and prevents duplicates.

- title: The product name customers expect to see. Keep it specific and readable.

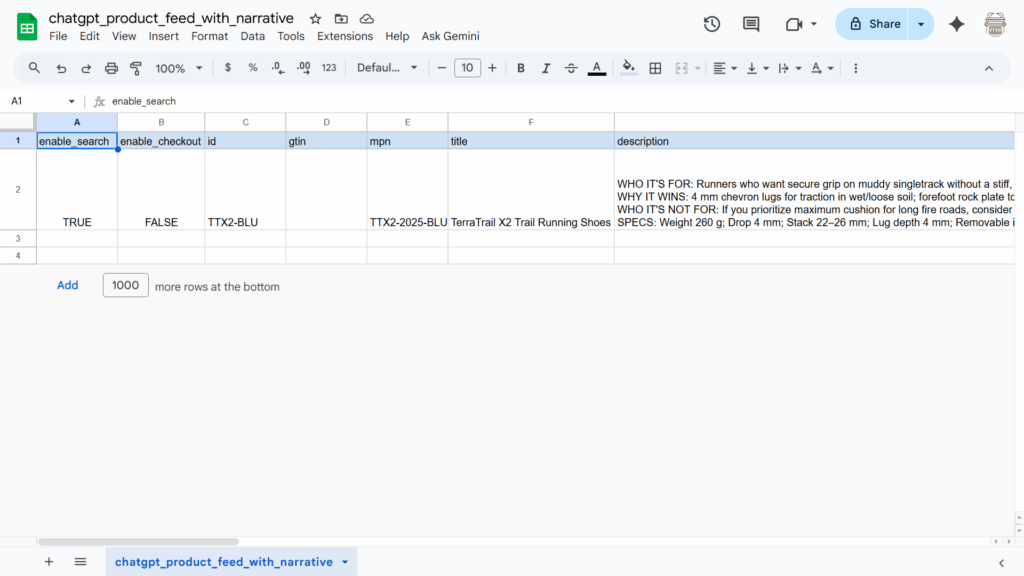

- description: A clear, scannable explanation of what the product is, what it does, who it’s for, and why it’s different. Treat this like conversion copy, not a keyword dump.

- brand: The brand or manufacturer. Consistency here improves matching and trust.

- gtin: The global trade item number, such as a UPC or EAN. When available, this is the most reliable cross-system identifier for exact product matches and review association.

- mpn: The manufacturer part number. Use it with brand if you lack a GTIN.

- image_link and additional_image_link: The primary product image and any extra angles or lifestyle shots. Assets should be accurate for the variant (color, size) and high quality.

- video_link: A product video for demonstrations, comparisons, or unboxing. Helpful for the assistant to surface richer explanations.

- model_3d_link or virtual_model_link (terminology varies by platform): A 3D asset the assistant or storefront can reference for interactive views or augmented reality in supported categories.

- price: The regular price with currency code.

- sale_price and sale_price_effective_date: A promotional price and the start/end window when it applies. Always pair these so the assistant knows when the discount is valid.

- availability: Stock status such as in_stock, out_of_stock, preorder, or backorder (terminology varies by platform).

- availability_date: When a preorder or backorder is expected to ship. Required when you mark something as preorder.

- inventory_quantity: Your current stock count. In OpenAI’s feed this helps the assistant avoid recommending something you can’t fulfill.

- condition: Usually “new,” but other values exist for refurbished and used goods where allowed.

- item_group_id: The parent code that ties variants together. Use the same value across color and size variants so the assistant can show a single product with selectable options.

- color / size / size_system / gender: Variant attributes, especially important in apparel and footwear. Keep naming consistent across the group.

- shipping: Your shipping methods, regions, and prices in a structured format. Use it to expose free-shipping thresholds and expedited options where they help conversion.

- delivery_estimate: When the customer can expect the item to arrive. The assistant can use this to answer timing questions.

- geo_price and geo_availability: Region-specific overrides for price and stock. Handy for cross-border or multi-state operations with local pricing or inventory pools.

- seller_name / seller_url / policy fields: Merchandising and trust elements—your store name, privacy policy, terms, and return policy. If you enable checkout, many of these become required.

- product_review_count / product_review_rating: A quick summary of review volume and score to aid ranking and give the assistant context.

- raw_review_data or q_and_a: Snippets of customer reviews or curated questions and answers. This is powerful: the assistant can quote or paraphrase real buyer language to resolve objections.

- related_products and relationship_type: Cross-sell and compatibility. Examples include required accessories, compatible parts, alternatives, or bundles. This is how you encode the buying graph you want the assistant to use.

- enable_search: Whether a product is eligible to surface in ChatGPT shopping results.

- enable_checkout: Whether the product is eligible for in-chat purchasing. These two flags let you stage participation without committing everything at once.

- created_at / updated_at: Timestamps for auditing and freshness logic. Keep them accurate to make incremental updates reliable.
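Several of these fields come with pairing rules: sale_price with sale_price_effective_date, preorder with availability_date, and a currency code on every price. The sketch below checks them in Python. The value formats it assumes, a `"19.99 USD"` price string and a `start/end` ISO 8601 sale window, are illustrative guesses; confirm the exact formats against the current Product Feed spec before reusing it. The name `validate_record` is hypothetical.

```python
import re
from datetime import datetime
from typing import Optional

# Assumed formats (confirm against the current spec):
#   price, sale_price:          "<amount> <3-letter ISO 4217 code>", e.g. "19.99 USD"
#   sale_price_effective_date:  "<ISO 8601 start>/<ISO 8601 end>"
#   availability_date:          ISO 8601 date or datetime
# The regex checks shape only; it does not confirm the code is a real currency.
PRICE_RE = re.compile(r"^\d+(\.\d{1,2})? [A-Z]{3}$")
REQUIRED = ("id", "title", "price", "availability")


def _parse(ts: str) -> Optional[datetime]:
    """Parse an ISO 8601 timestamp, returning None when it is malformed."""
    try:
        return datetime.fromisoformat(ts)
    except ValueError:
        return None


def validate_record(rec: dict, now: Optional[datetime] = None) -> list[str]:
    """Return the problems found in one feed record; an empty list means it passed.

    Assumes all timestamps in a record (and `now`) share one convention,
    either all with UTC offsets or all without, so they can be compared.
    """
    problems = [f"missing {key}" for key in REQUIRED if not rec.get(key)]
    for key in ("price", "sale_price"):
        if rec.get(key) and not PRICE_RE.match(rec[key]):
            problems.append(f"{key} should look like '19.99 USD'")
    if rec.get("sale_price"):
        window = rec.get("sale_price_effective_date") or ""
        parts = [_parse(p) for p in window.split("/", 1)] if "/" in window else []
        if len(parts) != 2 or None in parts:
            problems.append("sale_price needs a valid start/end sale_price_effective_date")
        elif parts[0] >= parts[1]:
            problems.append("sale window must end after it starts")
    if rec.get("availability") == "preorder":
        ship = _parse(rec.get("availability_date") or "")
        if ship is None:
            problems.append("preorder needs a valid availability_date")
        elif now is not None and ship <= now:
            problems.append("availability_date should be in the future")
    return problems
```

Returning a list of messages rather than raising on the first error lets the exporter log every problem in a run and decide whether to skip the record or fail the whole file.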

A short, practical checklist

- Maintain a separate OpenAI feed so you can write conversational descriptions, include reviews and Q&A inline, define relationships, and set participation flags per SKU.

- Keep identifiers clean: GTIN where available; otherwise brand plus MPN; never recycle id.

- Pair sale_price with sale_price_effective_date; include availability_date for preorders; validate currency codes.

- Update whenever product, price, or availability changes—fifteen-minute exports are supported and recommended.

- Start with discovery enabled and checkout off; enable checkout per SKU once stock, taxes, and shipping are proven.
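The identifier rules in this checklist are mechanical enough to automate. The sketch below verifies a GTIN’s length and GS1 mod-10 check digit, falls back to requiring brand plus MPN, and flags any `id` found in a set of retired ids. `identifier_problems` and the `retired_ids` registry are hypothetical names for something you would maintain yourself.

```python
def gtin_is_valid(gtin: str) -> bool:
    """Check GTIN-8/12/13/14 length and the GS1 mod-10 check digit."""
    if not (gtin.isascii() and gtin.isdigit()) or len(gtin) not in (8, 12, 13, 14):
        return False
    body, check = gtin[:-1], int(gtin[-1])
    # Weights alternate 3, 1, 3, ... starting at the digit just left of the check digit.
    total = sum(int(d) * (3 if i % 2 == 0 else 1) for i, d in enumerate(reversed(body)))
    return (10 - total % 10) % 10 == check


def identifier_problems(rec: dict, retired_ids: set[str]) -> list[str]:
    """Apply the checklist: valid GTIN, else brand plus MPN, and never a recycled id."""
    problems = []
    if rec.get("id") in retired_ids:
        problems.append(f"id {rec['id']!r} was used by a retired product")
    gtin = rec.get("gtin") or ""
    if gtin:
        if not gtin_is_valid(gtin):
            problems.append(f"gtin {gtin!r} fails the check-digit test")
    elif not (rec.get("brand") and rec.get("mpn")):
        problems.append("without a gtin, provide both brand and mpn")
    return problems


if __name__ == "__main__":
    print(gtin_is_valid("036000291452"))   # True  (UPC-A)
    print(gtin_is_valid("4006381333931"))  # True  (EAN-13)
    print(gtin_is_valid("4006381333932"))  # False (wrong check digit)
```

A passing check digit only proves the number is well formed, not that it belongs to your product, so it complements rather than replaces matching GTINs against your supplier data.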

Closing thought

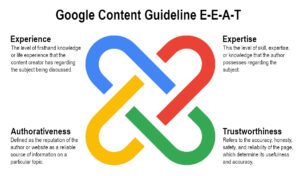

Previously, your shopping feed was a supporting document that needed to line up with your PDPs and help an ad platform target the right queries. In the agentic era, your feed is the main character: it’s the content the assistant reads, reasons over, and uses to recommend the right bundle, answer the buyer’s objections, and—in many cases—complete the order. That’s why this isn’t “just SEO.” It’s the connective tissue between product truth, persuasive storytelling, and a new, conversational shopping surface.